Introduction.

Many AI players, including Anthropic (Claude), OpenAI, Google, Alibaba and ByteDance (TikTok), have released models or services for controlling computers, phones and other devices. A model of this sort looks at a graphical user interface (GUI) and executes user commands by controlling it. ByteDance open-sourced a model named UI-TARS, together with a plugin that integrates it with a browser (see https://github.com/bytedance/UI-TARS), making it easy to run an autonomous agent for free, locally, on your own hardware.

Most of the new models are able to execute 80% or 90% of simple single-turn commands (such as “click microphone icon”, “add new slide” or “click share button”). For multi-turn tasks (such as “buy me pizza from the nearest restaurant on bolt”) the accuracy can be anywhere from 0% to 60%, depending on the difficulty. If the test cases closely resemble the training data, these models may indeed be very accurate, possibly helping with test automation or with controlling a set of predefined apps in their common routines. Testing the agent in an unfamiliar environment often produces much worse performance: it turns out to be incorrect to assume that training on 1000 or more apps will cover all possible ones. That assumption rests on another flawed one, namely that the logical structure of a GUI is made of fixed universal rules. Such rules (“x closes the app”, “magnifying glass activates search” etc.) play a role, but a relevant part of the structure is organized by a self-emergent cooperative game order that is inherently complex.
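
One rough way to see why multi-turn accuracy drops so far below single-turn accuracy is to treat a task as a chain of steps that must all succeed. The sketch below assumes that every step succeeds independently with the same probability, which is a simplification made only for illustration: real steps are correlated, and an agent can sometimes recover from a mistake. The function name is hypothetical.

```python
def task_success_rate(step_accuracy: float, num_steps: int) -> float:
    """Probability that every step of a task succeeds.

    Assumption (illustrative only): steps are independent and share
    the same per-step accuracy.
    """
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_accuracy ** num_steps


if __name__ == "__main__":
    for accuracy in (0.8, 0.9):
        for steps in (1, 5, 10):
            rate = task_success_rate(accuracy, steps)
            print(f"per-step {accuracy:.0%}, {steps:2d} steps: {rate:.0%}")
```

Under this simplification, 90% per-step accuracy leaves roughly 59% for a five-step task and about 35% for a ten-step task, so a strong single-turn model can still be unreliable on longer tasks.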

Secondly, there are other inherent difficulties: even a flawless agentic voice interface is not a good replacement for a GUI, as a voice interface is very often less precise and harder to control and predict.

For these reasons many projects based on these systems in their present form may fail, especially if they are planned without awareness of these limitations. This applies especially to taking for granted the promises of easy human-level autonomy and unlimited generalization, and to disregarding the inherent advantages of a GUI over a natural language interface.

Better data and training produce improvements.

Performance is improving, and it shows some interesting patterns. Before late 2023 there was no multimodal large language model (MLLM) that was effective at locating UI elements to click. GPT-4V scored about 16% accuracy on ScreenSpot. Then, at the turn of the year, we saw SeeClick and CogAgent: medium-sized MLLMs (9.6B and 18B parameters respectively) finetuned on UI location data, which reached accuracy of 55% and 47% respectively. The SeeClick authors trained the model mostly on data crawled from the internet, with UI location boxes and element annotations read from HTML where available. This was of course cost-effective, but had obvious limitations.
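
For orientation, single-turn location benchmarks of this kind are typically scored by checking whether the predicted click point lands inside the bounding box of the target element. The sketch below assumes that scoring rule and a hypothetical (left, top, right, bottom) box format; it is not the official ScreenSpot evaluation code, and the sample coordinates are made up.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (left, top, right, bottom), assumed format


def is_hit(click: Point, box: Box) -> bool:
    """True if the click point lies inside the box (edges included)."""
    x, y = click
    left, top, right, bottom = box
    return left <= x <= right and top <= y <= bottom


def click_accuracy(clicks: Sequence[Point], boxes: Sequence[Box]) -> float:
    """Fraction of predicted clicks that fall inside their target boxes."""
    if len(clicks) != len(boxes):
        raise ValueError("clicks and boxes must have the same length")
    if not clicks:
        return 0.0
    hits = sum(is_hit(click, box) for click, box in zip(clicks, boxes))
    return hits / len(clicks)


if __name__ == "__main__":
    # Made-up predictions and targets, for illustration only.
    predicted = [(15, 15), (200, 200), (80, 30)]
    targets = [(10, 10, 50, 50), (60, 10, 100, 50), (60, 10, 100, 50)]
    print(f"accuracy: {click_accuracy(predicted, targets):.2f}")
```

A metric like this only says whether the right element was hit. It says nothing about whether the model understood why that element was the right one.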

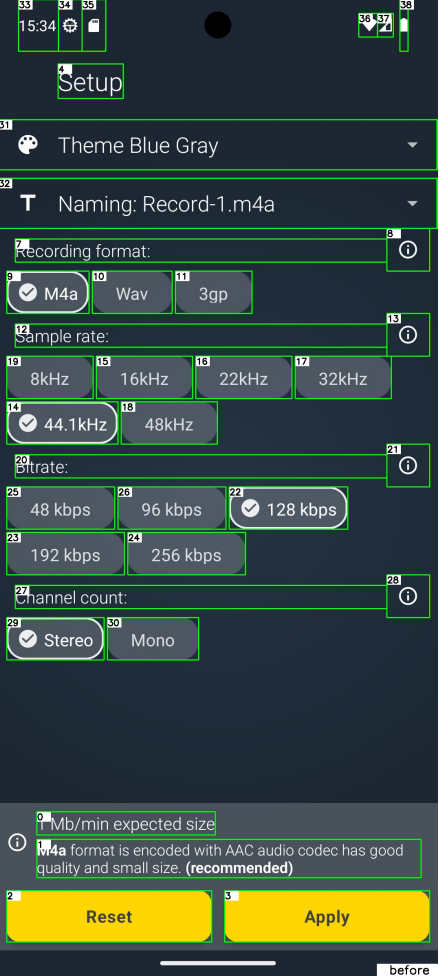

The subsequent generation of agentic models, such as Molmo, UI-TARS, OS World or AGUVIS, typically relied on finetuning on better data (and large amounts of it). With the use of expensive manual annotation, the datasets became more diverse and accurate than data extracted from HTML or annotated by an MLLM. UI-TARS, for example, claims to have sourced data from GUI tutorials available online. Many of these models used Qwen2-VL as the pretrained base model, which was already trained on UI data and demonstrated UI element detection performance similar to SeeClick. Autonomous multi-turn models are often trained while interacting with devices, which allows the model to freely explore the UIs and to verify and correct its actions.

In October 2024 the team I work on released TinyClick, a small 0.27B model that is competitive with much larger LLMs on single-turn commands (scoring 73% accuracy on ScreenSpot). Our approach included adopting the strong vision model Florence-2, which possesses a richer visual representation.

There are different solutions too. Some use Set-of-Mark prompting to give a generic MLLM UI detection capabilities (most MLLMs before 2025 performed poorly when asked for numeric positions, although this has improved considerably recently). This works by annotating UI elements with numbers and making the model refer to those numbers in its output (as in M3A or MobileAgent-V). Others rely on OCR and various accessibility annotations available in the system. Below is a conceptual example of the Set-of-Mark technique, taken from Rawles et al. Boxes are taken from Android accessibility annotations and annotated with numbers, so that the MLLM can refer to them.
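
Separately from the figure, the mechanics of Set-of-Mark prompting can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the implementation used by M3A or MobileAgent-V: the `UIElement` structure, the prompt wording and the hard-coded model reply are all hypothetical, and a real pipeline would also draw the numbers onto the screenshot before sending it to the model.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class UIElement:
    label: str                      # text or accessibility description (hypothetical field)
    box: Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def assign_marks(elements: List[UIElement]) -> Dict[int, UIElement]:
    """Give every element a numeric mark, starting from 1."""
    return {number: element for number, element in enumerate(elements, start=1)}


def build_prompt(command: str, marks: Dict[int, UIElement]) -> str:
    """Describe the marked elements so the model can answer with a number."""
    listing = "\n".join(f"[{number}] {el.label}" for number, el in marks.items())
    return (
        "The screenshot is annotated with numbered boxes:\n"
        f"{listing}\n"
        f"Command: {command}\n"
        "Answer with the number of the element to click."
    )


def parse_mark(reply: str, marks: Dict[int, UIElement]) -> Optional[int]:
    """Extract the first number from the reply; None if it is not a known mark."""
    match = re.search(r"\d+", reply)
    if match is None:
        return None
    number = int(match.group())
    return number if number in marks else None


def click_point(mark: int, marks: Dict[int, UIElement]) -> Tuple[int, int]:
    """Translate a mark back into pixel coordinates (centre of the box)."""
    left, top, right, bottom = marks[mark].box
    return ((left + right) // 2, (top + bottom) // 2)


if __name__ == "__main__":
    marks = assign_marks([
        UIElement("Search", (10, 10, 50, 50)),
        UIElement("Share", (60, 10, 100, 50)),
        UIElement("Microphone", (110, 10, 150, 50)),
    ])
    print(build_prompt("click share button", marks))
    reply = "2"  # stand-in for a model reply; no model is called here
    chosen = parse_mark(reply, marks)
    if chosen is not None:
        print("click at", click_point(chosen, marks))
```

The point of this design is that the model never has to produce coordinates. It only picks a number, and the mapping back to pixels is done by ordinary code.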

All these types of AI solutions are useful in some contexts, such as frontend testing, crawling dynamic websites or zero-code automation. These things are hard to do today, because GUIs and web pages lack a uniform interface for automated operation (and sometimes explicitly try to prevent such access).

Would you benefit by replacing your GUI with a natural language interface? Not necessarily.

Let’s assume, hypothetically, that we have a GUI agent with 100% single-turn accuracy and very good planning skills. Would that be useful? Certainly it would, at least in some areas, like operating your phone hands-free or without looking at it. Today visually impaired people use a voice interface called TalkBack, which is in fact a simple rule-based screen reader and navigation tool that lets them move through all the UI elements. AI can transform this type of interaction.

But a GUI serves an important purpose: not only to let us select specific functions and interact with them, but also to inform us and communicate with us more efficiently than a voice interface ever could, by showing us comprehensible visual information. Our control is also more precise and richer in information, with mouse click positions, selections and keystrokes transferred to the program with nearly perfect precision.

If we work on slides in PowerPoint, we use the mouse and keyboard to move and adjust various visual elements, seeing the effects constantly and in real time. If we order food online, we instantly see the available options and any additional information: is the price what we expect, are there delays in delivery, is our favourite dish available with all the add-ons we want. If we edit text or source code, we constantly look at it to stay aware of the context. Programs often inform us of issues such as typos or code errors.

In addition, we can learn to use an application very quickly, by looking at it and by some trial and error. The icons and menus of a text editor contain visual or textual hints denoting their purpose, and hovering over them with the mouse reveals additional information. This allows me to adopt various features more easily: I am instantly aware that they exist, I am provided with basic information, and I can experiment to see how to use them. A voice interface contains no such mechanism for discovering what is even available in the application.

Therefore, if you aim to use your application without looking at it, you need some other way of knowing what the application can do and how to do it.

Implicit game-theoretical structure of GUI – why is it hard to generalize for the AI?

By observing how agent systems work on real-world data, we can see certain specific patterns. They suggest, in particular, that getting from 50% to 80% or 90% accuracy is much easier than getting to 96% or 98%. This is a common pattern with other deep learning models too, whenever they interact with complex human-made environments.

Some agent commands are very simple for a vision-language model to learn. We know, for instance, that a magnifying glass icon denotes search functionality, that a bold B denotes “bold font” and a slanted I denotes “italic font”. There are also standardized icons for sharing, playing, attachments, notifications and interactions with services like Facebook or X.com. For a model to learn a command like “share on facebook” or “turn on italic font”, it is enough to learn to recognize an icon or pictogram. It is also simple to train a model to find any text annotations: using deep learning for optical character recognition is well established and easy to do.

This type of training could account for a large part of single-turn agent accuracy, but not all of it. Consider, as an example, the YouTube screenshot below. There are a number of buttons here, including thumbs-up (like) buttons as well as three-dot buttons for options. Most of those buttons are identical, and there is no obvious way to associate a given button with a UI element (either a video or a comment). Consider, for instance, the three-dot button in the middle of the screen (there are five such buttons). Should it control the comment on the left or the video on the right?

It is clearly not determined by simple distance. We see, however, that this button controls the comment, not the video. First of all, videos have their own identical buttons on the right.

Secondly, web pages are arranged in rectangular structures, denoted by <div> tags in the HTML, which tell us implicitly which button belongs to which element. There are no visible rectangles, but there is an implicit arrangement that we can see. Thirdly, there is a convention to put secondary controls (such as like or reply buttons) below the main content or to the right of it (as we write and read left to right).
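
The difference between “nearest element” and “enclosing rectangle” can be made concrete with plain rectangles. The sketch below is illustrative only: the coordinates, the `Item` structure and both heuristics are invented for this example and are not taken from any real page or agent. Each item has a visible content box and an implicit container box, standing in for the invisible rectangle of its <div>.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom)


@dataclass
class Item:
    name: str
    content: Box    # what is visibly drawn (comment text, video thumbnail)
    container: Box  # implicit rectangle that groups the item with its controls


def center(box: Box) -> Tuple[float, float]:
    left, top, right, bottom = box
    return ((left + right) / 2, (top + bottom) / 2)


def contains(outer: Box, inner: Box) -> bool:
    """True if inner lies entirely within outer."""
    return (outer[0] <= inner[0] and outer[1] <= inner[1]
            and inner[2] <= outer[2] and inner[3] <= outer[3])


def area(box: Box) -> int:
    return (box[2] - box[0]) * (box[3] - box[1])


def owner_by_distance(button: Box, items: List[Item]) -> str:
    """Naive heuristic: the item whose visible content is closest to the button."""
    return min(items, key=lambda it: math.dist(center(button), center(it.content))).name


def owner_by_containment(button: Box, items: List[Item]) -> Optional[str]:
    """Structural heuristic: the smallest container that encloses the button."""
    enclosing = [it for it in items if contains(it.container, button)]
    if not enclosing:
        return None
    return min(enclosing, key=lambda it: area(it.container)).name


if __name__ == "__main__":
    items = [
        Item("comment", content=(0, 100, 560, 160), container=(0, 100, 600, 160)),
        Item("video", content=(620, 100, 740, 160), container=(620, 100, 1000, 160)),
    ]
    three_dot_button = (570, 120, 590, 140)
    print("by distance:   ", owner_by_distance(three_dot_button, items))
    print("by containment:", owner_by_containment(three_dot_button, items))
```

In this made-up layout the distance heuristic assigns the button to the video, because the thumbnail is closer than the middle of a wide comment, while the containment heuristic assigns it to the comment. A model that sees only pixels has no container boxes to consult and has to infer that grouping from visual conventions.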

The point is that humans are extremely effective at navigating the conventional set of visual patterns that make up such a GUI, spotting immediately that there is some non-obvious pattern that fits and explains the functionality. UI designers know this and use it to make a GUI that is aesthetic but still easy to understand and use. In fact, a sort of cooperative game emerges between user and creator: we assume that the creator anticipated that we would perform such and such actions, and figured out ways to make them easy and practical. We see a recommended video and we want to watch it later. We don’t see a function to do it, but we know that the designer expects us to want it, so there should be some way to figure this out. So we hover over the video, and indeed we see a clock icon pop up, allowing us to add the video to the “watch later” list.

The apparent problem for AI is that there is no fixed set of these patterns, but rather a very large number of possible combinations. We could train an AI on YouTube, Twitter or Facebook, but the approximate representation learned by the model might not be sufficient for other, similar apps. This matters even more for applications that are fairly unique, such as Photoshop, Reaper (an audio editor) or Jira, where mapping the UI to its functionality requires substantial expertise that cannot be learned from other apps, and where using these features to good effect is an additional layer of difficulty.

Long story short: while such GUI agents may be useful in some very specific areas, they can be very unreliable elsewhere, ending up as an attempted solution to problems that could be avoided altogether.

How is GUI modelling similar to self-driving, language and ARC corpus?

Issues with AI GUI agents can be framed as a special case of a more general principle. A neural network is good at approximately representing hidden patterns in huge sets of data. It cannot, however, detect novel patterns as effectively as humans do. It is not effective on anything sufficiently unique.

This is a foundational problem of neural AI, and it applies to many fields. Self-driving is a similar area that deals with very complex problems. A significant part of the problem consists of human-made rules that have to be applied rationally to every specific situation. A common driving assistance system is lane keeping, designed to keep the car in its lane according to the lane markings on the road. Even such limited systems are known to be prone to dangerous malfunctions. If the markings are inconsistent, or the system’s sensors are somehow disturbed, the system might end up steering the car straight into the ditch or into the opposite lane. Many incidents of this sort are documented online. The more complex systems found in fully self-driving cars are not fully self-driving either, as they rely on human employees. One autonomous vehicle firm, Cruise, was known to employ an operations staff of 15 workers for every ten vehicles on average, as of 2023. Meanwhile, Tesla is now being sued over serious accidents in which its cars reportedly accelerated spontaneously and crashed.

The main reason for these ills is the fact that AI is different from humans, and noticeably less performant on spontaneous generalization and detection of patterns that it never saw before.

In fact, AI is fundamentally poor at it. It is great at representing data when given a huge amount of it, but not at adapting quickly from a very small number of unique examples. Most humans can learn to play Warcraft 3 in a few minutes, whereas an AI can utterly fail on the 11th map of Warcraft 3 after being extensively trained on the previous 10 maps. The same goes for car traffic: we constantly see situations that differ from anything in our past, but we are able to figure them out. We do not do this by perfect classification, which is often very hard. Humans are not disturbed by inconsistent or spurious signs, because analysing the unique context leads them to good enough decisions. This especially applies to domains organized by other humans, which are indeed a phenomenon of cooperative game theory.

Other problems with this type of complexity include modelling human communication, as well as an AI benchmark called the Abstraction and Reasoning Corpus. Language might appear to consist of some logical structure, but it is impossible to ignore its implied sense. We attach meaning through the alignment of subtle details in the language, the environment and the common knowledge of the people talking.

For example (to use H. Grice’s examples), when A asks B “How is Mr C. doing at his job? Hasn’t he gone to prison yet?” it is clear that the meaning is not only a question, but also a certain implied clue that is made sensible by the common knowledge that A and B share. Maybe C is a thief, or maybe there is indeed some very high legal risk attached to his work. Or, when we say that “Donald Trump is an important man” we imply something different than when we say “the US president is an important man”, despite saying “logically” the same thing about the same person. I wrote more about that in my book, in Chapter 2.

These implied patterns can get very complex and unique, connecting diverse bits of information. Quite expectedly, LLMs are not very good at dealing with them. Another similar example of self-organized complexity is a benchmark called the Abstraction and Reasoning Corpus (ARC), made by F. Chollet. Below we see an example of a problem from this corpus. The dataset consists of riddles on a square board.

Every riddle has a few solved cases for demonstration purposes, as well as a single test example that the algorithm has to solve. It is important to notice that “the riddle” is already a spontaneously emergent game-theoretical order. At the heart of the riddle lies a comprehensible pattern, consistent enough for a unique solution to be deduced by the test taker. That often means that there is a solution that is a perfect fit while also being simple (simpler than other solutions). This is quite visible when we take a math exam at school: if we arrive at something that is nearly impossible to solve, or at least ugly, then it is often the wrong solution, because we can expect the teacher to have made the exam solvable.
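
The idea of “a perfect fit that is also simple” can be illustrated with a toy solver. The sketch below is only an illustration under strong assumptions: the riddle and the four candidate rules are invented, real ARC tasks need far richer rules, and using list order as a stand-in for simplicity is a deliberate simplification. The solver returns the first candidate that reproduces every demonstration pair and applies it to the test input.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Grid = List[List[int]]
Rule = Tuple[str, Callable[[Grid], Grid]]


def identity(grid: Grid) -> Grid:
    return [row[:] for row in grid]


def flip_horizontal(grid: Grid) -> Grid:
    return [row[::-1] for row in grid]


def flip_vertical(grid: Grid) -> Grid:
    return [row[:] for row in grid[::-1]]


def transpose(grid: Grid) -> Grid:
    return [list(column) for column in zip(*grid)]


# Hypothetical rule library, listed from "simplest" to more complex.
CANDIDATE_RULES: List[Rule] = [
    ("identity", identity),
    ("flip_horizontal", flip_horizontal),
    ("flip_vertical", flip_vertical),
    ("transpose", transpose),
]


def find_rule(demos: Sequence[Tuple[Grid, Grid]]) -> Optional[Rule]:
    """Return the first candidate that reproduces every demonstration pair."""
    for name, rule in CANDIDATE_RULES:
        if all(rule(inp) == out for inp, out in demos):
            return name, rule
    return None


if __name__ == "__main__":
    # Invented riddle: each output is the input mirrored left to right.
    demos = [
        ([[1, 0], [0, 0]], [[0, 1], [0, 0]]),
        ([[2, 2, 0]], [[0, 2, 2]]),
    ]
    test_input = [[0, 3], [4, 0]]
    found = find_rule(demos)
    if found is None:
        print("no candidate rule fits all demonstrations")
    else:
        name, rule = found
        print(name, rule(test_input))
```

The hard part of ARC is exactly what this toy skips: nobody hands the solver a small library that already contains the right rule.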

The Abstraction and Reasoning Corpus is this type of problem. Its purpose is to provide a unique set of tasks that is not well represented in available data, and to see how a machine learning system fares when trying to decompose a set of pixels into an abstract representation (lines, shapes, rules and so on) and to extrapolate a solution from this representation. Neural networks initially proved to be quite poor at this task, scoring 30% or less (compared to 80%-100% human accuracy). Later, up to 80% performance was reported for some LLMs, particularly those from OpenAI, but the real-world relevance of that result is low, as LLM accuracy can be inflated given enough similar training data. Seeing a high score on ARC, we would also expect to see better performance on other original use cases, but we know that OpenAI LLMs are on the same level as Claude, Gemini or Deepseek, being subject to very similar limitations as any LLM. If there is a difference between Deepseek R1 and Sonnet 3.7 (which scores less than 30%) and OpenAI o3, then the difference lies in some very specific technique that leverages the limited structure of ARC, through data preparation or otherwise, not in solving the fundamental issues of LLMs, in which OpenAI has no visible advantage.

Currently the top open ARC solution is the paper “Combining Induction and Transduction for Abstract Reasoning” which, scoring 57% accuracy, offers quite unique insight into possible solution strategies. The presented approach includes generating many Python programs for solving the riddles, ingenious strategies for generating training data (based on constructing riddles by combining basic abstract concepts), and ensembling and ranking the final results. It is therefore clear that this specific problem becomes solvable as complexity and effort grow, but that is not necessarily very representative of more general, real-world problems (such as robotics, GUI agents or self-driving).